Disrupting Industries with Data Science: Machine Learning as the Key to Disruptive Innovation

Develop actionable innovation strategies by harnessing the power of advanced machine learning techniques such as RoBERTa, BART, LLMs, and LDA

Introduction

Are you someone who gets excited about disruptive innovation? Do you wonder how charismatic innovators and leaders disrupt well-established industries? Do you often think about processes and frameworks for innovation?

In a world where change is the only constant, the ability to innovate and disrupt has become crucial for businesses to thrive. Throughout history, there have been pivotal moments where innovation not only changed industries but also altered the way we live. Consider Airbnb, which didn’t just shake up the hotel industry; it rewrote the rules of hospitality, turning any home into a potential hotel. Then there’s Netflix, which changed the way we consume entertainment. Amazon started as an online bookstore and has completely changed how we shop. Google changed search so thoroughly that it’s hard to imagine how we ever found information online before it. Uber turned the taxi industry upside down. Much earlier, Ford had a similar impact with its assembly line, changing not just car manufacturing but how products are made worldwide. Digital cameras and smartphones merged photography with communication, making traditional cameras, and even separate phones, largely obsolete.

Each innovation is a story of transformation, showing how bold ideas can lead to seismic shifts in our daily lives and in entire industries. But what really lies behind this phenomenon? Is it sheer brilliance, creative genius, calculated strategy, or a blend of all three? I’d guess it’s a combination of all of them. Let’s dive deeper into how you can harness data science and machine learning as a key driver of innovation.

Framework for identifying disruption opportunities

Innovation can be categorized in various ways, but the three main categories are:

Incremental Innovation: This type of innovation involves making small improvements to existing products or services. The changes are typically minor and aim to enhance the user experience or one or more product attributes, such as performance, security, or efficiency, without radically altering the status quo. This is the most common type of innovation we see around us.

Disruptive Innovation: Coined by Clayton Christensen (I am a big fan of his work), the term refers to an innovation that significantly alters the way a market or industry functions. It starts with a product or service that initially targets a small and often overlooked customer segment (an underserved or overserved segment) but eventually moves upmarket, displacing established competitors.

Radical Innovation: This introduces a fundamentally new concept, product, or service that has a significant impact on the market. It often involves breakthrough technologies or novel ideas that can create new industries or render existing ones obsolete.

To spot opportunities for disruptive or radical innovation, start by identifying customer segments that are underserved or overlooked by current market leaders. Focus on the critical product or service attributes these segments value, particularly those poorly addressed by incumbents. Once you have identified these segments and their unmet needs, the next step is to come up with products or services that serve them and fulfill those needs. The key often lies in rethinking the business model and value chain to build distinctive products and services that are hard for competitors to copy.

To understand this in more detail, I highly recommend two books: The Innovator’s Dilemma by Clayton Christensen and Seizing the White Space: Business Model Innovation for Growth and Renewal by Mark W. Johnson. Both provide a thorough framework for analyzing and uncovering opportunities for innovation and disruption.

I also cover this topic in a bit more detail in my previous blog post, Develop User Empathy and Craft Better Products: Using Machine Learning to Analyze User Feedback via Clustering & Sentiment Analysis.

Why data science is the secret to business disruption

Disruptive innovation hinges on a deep understanding of user needs, achieved through empathy and thorough research. This process entails uncovering new insights using a blend of qualitative and quantitative research methods, all the while minimizing biases. Machine Learning (ML) algorithms play a critical role in identifying these opportunities for innovation and disruption. Advanced ML algorithms excel in detecting complex patterns and correlations within data, crucial for deciphering consumer behaviors and the motivations behind them. This pattern recognition extends beyond quantitative data to qualitative inputs like customer reviews and interviews.

Traditional analytical approaches, though effective for quantitative data, often fall short on qualitative data such as customer reviews. Here, neural network-based transformer models are extremely useful. They are good at interpreting the subtleties of human language and typically provide more accurate sentiment analysis than conventional methods such as keyword or lexicon-based scoring.

By combining design thinking, quantitative research, and machine learning we can deepen our understanding of users. ML techniques help us address the challenges of biases, time constraints, and scalability inherent in qualitative user research. Combining these techniques effectively enables the creation of more user-centric products, informed by a nuanced understanding of consumer needs and preferences. This approach not only aligns with best practices in product development but also enhances our ability to innovate in ways that truly resonate with users.

Now let’s go through an example of applying machine learning to uncover user needs and come up with a new business model for disruption. I will use airline customer reviews as the data set to demonstrate this process, but in real life it can be adapted to many other applications, including niche ones. Let’s dive into the fun stuff.

Leveraging machine learning to identify opportunities for disruptive innovation

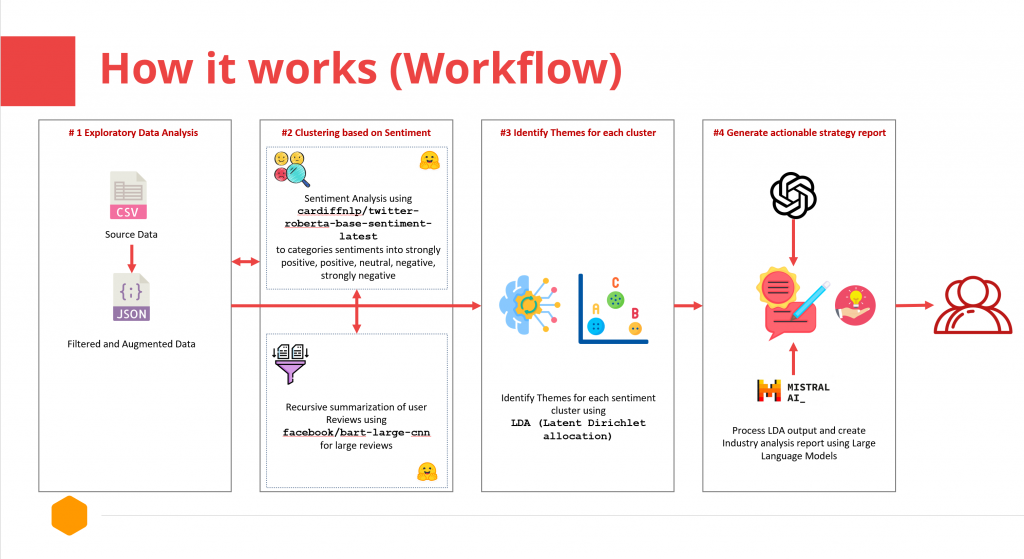

How it works: High-level system design and workflow

There are four main steps in this analysis. The first is to understand the data and select the attributes for further analysis. In this case I am only using user reviews, but for other real-world applications this would involve domain knowledge and analysis of the available attributes. In the next step, I use transformer models, BART (Bidirectional and Auto-Regressive Transformers) for summarization and RoBERTa (A Robustly Optimized BERT Pretraining Approach) for classification, to perform sentiment analysis and group the user reviews into clusters by sentiment. The third step uses LDA (Latent Dirichlet Allocation) to uncover common topics and themes in each cluster. In the last step, I pass the LDA output to a Large Language Model (gpt-4-0125-preview and Mistral Medium) with prompt instructions to generate the final industry analysis report.

The following diagram shows the high-level workflow:

You can check out the code on GitHub.

Now let’s dive deeper into each of these steps.

Step #1 Data Preparation and Exploratory Data Analysis

For this analysis, I am using airline review data from Kaggle. The dataset is available for public use under an Open Data Commons license.

Exploratory Data Analysis (EDA) is a foundational step in data processing and model building. Through EDA we develop a deeper understanding of the data itself: variable types and distributions, and any inconsistencies and errors that need data cleaning and preprocessing.

This in turn helps in selecting significant features for subsequent model building. EDA also informs the choice of the most suitable models and algorithms, making the modeling process more accurate and efficient.

I used pandas and ProfileReport from ydata_profiling for a quick EDA. The EDA code is available on GitHub.

Overall, the dataset contains 20 columns, each of which can offer some insight into customers’ travel experience.

```text
<class 'pandas.core.frame.DataFrame'>
RangeIndex: 23171 entries, 0 to 23170
Data columns (total 20 columns):
 #   Column                  Non-Null Count  Dtype
---  ------                  --------------  -----
 0   Unnamed: 0              23171 non-null  int64
 1   Airline Name            23171 non-null  object
 2   Overall_Rating          23171 non-null  object
 3   Review_Title            23171 non-null  object
 4   Review Date             23171 non-null  object
 5   Verified                23171 non-null  bool
 6   Review                  23171 non-null  object
 7   Aircraft                7129 non-null   object
 8   Type Of Traveler        19433 non-null  object
 9   Seat Type               22075 non-null  object
 10  Route                   19343 non-null  object
 11  Date Flown              19417 non-null  object
 12  Seat Comfort            19016 non-null  float64
 13  Cabin Staff Service     18911 non-null  float64
 14  Food & Beverages        14500 non-null  float64
 15  Ground Service          18378 non-null  float64
 16  Inflight Entertainment  10829 non-null  float64
 17  Wifi & Connectivity     5920 non-null   float64
 18  Value For Money         22105 non-null  float64
 19  Recommended             23171 non-null  object
dtypes: bool(1), float64(7), int64(1), object(11)
memory usage: 3.4+ MB
```

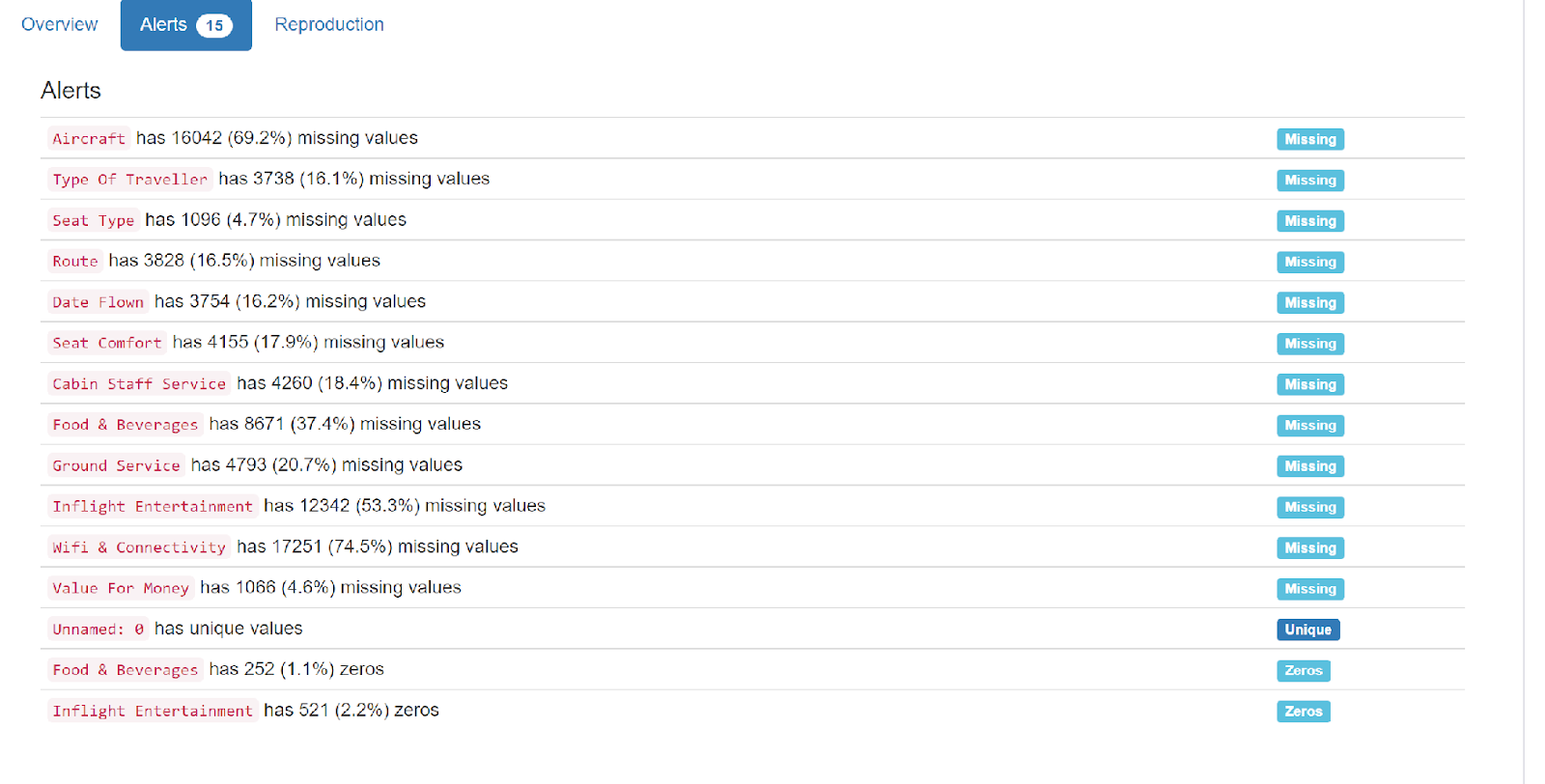

Many columns have a lot of missing data. For example, only 5,920 of the 23,171 rows have a Wifi & Connectivity rating. Some of these columns would need imputation, and a few are likely too sparse to be very useful in modeling even then.
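To put numbers on that, here is a small sketch that computes the share of missing values per column directly from the non-null counts in the `df.info()` output above (23,171 rows in total). With the DataFrame loaded, `df.isna().mean().sort_values(ascending=False)` gives the same result in pandas; the dictionary below simply hard-codes the reported counts so the example runs without the dataset.

```python
# Non-null counts copied from the df.info() output above
TOTAL_ROWS = 23171
NON_NULL = {
    "Review": 23171,
    "Value For Money": 22105,
    "Seat Type": 22075,
    "Date Flown": 19417,
    "Type Of Traveler": 19433,
    "Route": 19343,
    "Seat Comfort": 19016,
    "Cabin Staff Service": 18911,
    "Ground Service": 18378,
    "Food & Beverages": 14500,
    "Inflight Entertainment": 10829,
    "Aircraft": 7129,
    "Wifi & Connectivity": 5920,
}


def missing_share(non_null, total):
    """Fraction of rows with no value, per column."""
    return {column: 1 - count / total for column, count in non_null.items()}


shares = missing_share(NON_NULL, TOTAL_ROWS)
# Print columns from most to least sparse
for column, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{column:<24}{share:6.1%}")
```

Roughly three quarters of the Wifi & Connectivity ratings and about 69% of the Aircraft values are missing, while the Review column is complete.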

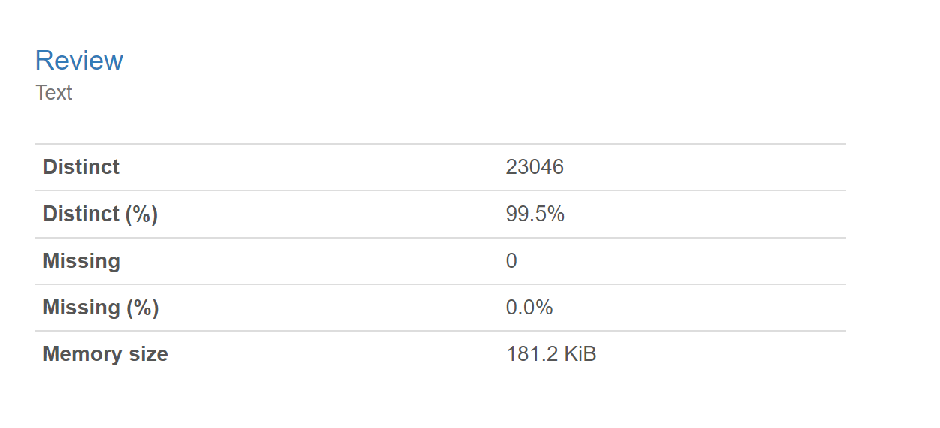

In my analysis, I focus on just the Review column. The good thing about this column is that none of its values are missing.

A good follow-up analysis would be to look for correlations between sentiment and airline, seat type, or type of traveler, but in this post I focus on the US airline industry as a whole, irrespective of airline or type of travel. This is a crucial first step toward developing an industry-level understanding.

Step #2 Sentiment and Cluster Analysis

The goal of this step is to process user reviews and create clusters based on user sentiment. Let’s dive deeper into how I am doing this in the project:

Sentiment Analysis:

For sentiment analysis I am using cardiffnlp/twitter-roberta-base-sentiment-latest from Hugging Face, a model based on RoBERTa (A Robustly Optimized BERT Pretraining Approach) and fine-tuned for sentiment classification.

RoBERTa is an optimized version of BERT (Bidirectional Encoder Representations from Transformers), designed for more robust performance. It is trained on a much larger dataset and for longer than BERT. Like BERT, it attends to the entire input at once, in both directions, rather than reading it piece by piece, which lets it capture context better. This understanding of context is crucial for accurately determining sentiment: it can often distinguish between a genuinely positive review and a sarcastically positive one, a nuance that simpler models might miss.

By default, the model classifies sentiment as negative, neutral, or positive, but I used the confidence score it returns for its predicted label to split the reviews into five clusters: Strongly Negative, Negative, Neutral, Positive, and Strongly Positive. A positive or negative prediction with a score above 0.66 is treated as strong. This finer-grained sentiment analysis gives a more nuanced understanding of the emotions in the reviews, which can lead to more accurate insights and is especially useful for tailoring products or services. The key is to identify the most painful and prevalent issues and then tailor an offering to alleviate those pain points.

Summarization:

The challenge with RoBERTa-based models is that they have a token limit of 512, and many of these user reviews are much longer than that. To overcome this, I use a BART (Bidirectional and Auto-Regressive Transformers) model to summarize long reviews and then pass the summary to RoBERTa for sentiment analysis.

BART is useful for condensing large volumes of text into shorter forms while largely retaining the sentiment expressed in the original. BART combines an encoder and a decoder: the encoder reads and processes the input text, capturing its context and nuances, and the decoder then generates the summary. For this task I am using facebook/bart-large-cnn from Hugging Face. The model is fairly well rated and is fine-tuned on the CNN/Daily Mail news summarization dataset, which I thought would transfer well to summarizing customer reviews of air travel.

The issue I ran into with this BART model is that it has a token limit of 1,024, and many reviews exceed 2,000 tokens. To get around this, I use recursive chunk-based summarization to bring each review below BART’s limit, followed by a final BART pass that brings it under RoBERTa’s 512-token limit, while preserving as much nuance and context as possible. This was inspired by the recursive chunking and splitting method in LangChain.

Here’s how it works:

Each review is first tokenized with the RoBERTa tokenizer. Depending on its length, one of three paths applies:

- Up to 510 tokens: the review goes straight to RoBERTa for sentiment analysis.
- 511 to 1,000 tokens: the review is summarized once with BART and then passed to RoBERTa.
- More than 1,000 tokens: the review is too long even for BART, so it is first split into overlapping chunks of 750 tokens, with 150 tokens of overlap between neighbors. Each chunk is summarized individually with BART, and the summaries are concatenated into a shorter but still comprehensive version of the review. If this concatenated summary still exceeds 1,000 tokens, the process becomes recursive: the summary itself is chunked and summarized again. If the result is still longer than 510 tokens, a final BART pass brings it under RoBERTa’s limit.

Summarization is inherently lossy, but this approach works around the token limits while keeping as much of each review’s content and context as possible. The alternative, truncating long reviews, would discard everything past the first 512 tokens and risk misreading the customer’s overall sentiment.
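To make the windowing and recursion concrete without loading any models, here is a framework-free sketch. It mirrors the chunk size (750), overlap (150), and 1,000-token limit used in split_and_summarize_recursively.py, but works on plain token lists and takes the summarizer as a parameter. The `halve` function is a toy stand-in for BART used only for illustration, and the names `overlapping_windows` and `recursive_summarize` are hypothetical. Unlike the original, which joins summary strings, this version concatenates token lists.

```python
def overlapping_windows(n_tokens, chunk_size=750, overlap=150):
    """Return (start, end) offsets of overlapping chunks covering n_tokens tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    windows = []
    start = 0
    while start < n_tokens:
        end = min(start + chunk_size, n_tokens)
        windows.append((start, end))
        # Step back by `overlap` tokens for context, unless we reached the end
        start = end - overlap if end < n_tokens else end
    return windows


def recursive_summarize(tokens, summarize, limit=1000, chunk_size=750, overlap=150):
    """Shrink `tokens` to at most `limit` by summarizing overlapping chunks.

    `summarize` maps a list of tokens to a (shorter) list of tokens.
    """
    if len(tokens) <= limit:
        return tokens
    pieces = []
    for start, end in overlapping_windows(len(tokens), chunk_size, overlap):
        pieces.extend(summarize(tokens[start:end]))
    # Guard against infinite recursion if the summarizer does not shrink the text
    if len(pieces) >= len(tokens):
        raise RuntimeError("summarizer did not reduce the token count")
    return recursive_summarize(pieces, summarize, limit, chunk_size, overlap)


def halve(chunk):
    """Toy summarizer for illustration only: keep every other token."""
    return chunk[::2]


print(overlapping_windows(2000))
print(len(recursive_summarize(list(range(2000)), halve)))
```

For a 2,000-token review the chunks are (0, 750), (600, 1350), (1200, 1950), and (1800, 2000). With the toy summarizer, the first pass yields 1,225 tokens, which is still over the limit, so a second pass brings it down to 688. The explicit shrink check is worth keeping in any real version: because `summarize_text` forces at least 400 output tokens, a chunk-level summary is not guaranteed to be much shorter than its input.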

Code for sentiment analysis:

You can check out the full code on GitHub.

sentiment_analysis_roberta.py

```python
import json

from transformers import AutoModelForSequenceClassification, AutoTokenizer, pipeline

from review_large_token_length import token_length_review

MODEL_NAME = "cardiffnlp/twitter-roberta-base-sentiment-latest"

# Load the model and tokenizer once and reuse them in the pipeline
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
model = AutoModelForSequenceClassification.from_pretrained(MODEL_NAME)

# RoBERTa-based sentiment classifier (labels: negative, neutral, positive)
classifier = pipeline("sentiment-analysis", model=model, tokenizer=tokenizer)


def process_reviews_sentiment_analyzer(base_path, input_filename, output_filename):
    # Predictions with confidence above 0.66 are categorized as "strongly" positive/negative
    strong_threshold = 0.66

    # Read JSON file: {airline_name: [review_dict, ...], ...}
    with open(f"{base_path}/{input_filename}", "r") as file:
        data = json.load(file)

    # Perform sentiment analysis and update data in place
    for reviews in data.values():
        for review in reviews:
            # Shorten long reviews so they fit RoBERTa's 512-token limit
            adjusted_review = token_length_review(review["Review"])
            result = classifier(adjusted_review)
            score = result[0]["score"]
            label = result[0]["label"]

            # Map the 3-class label plus its confidence to 5 sentiment clusters
            if label == "positive":
                sentiment = "strongly positive" if score > strong_threshold else "positive"
            elif label == "negative":
                sentiment = "strongly negative" if score > strong_threshold else "negative"
            else:
                sentiment = "neutral"

            # Add sentiment result to the review data
            review["Sentiment"] = sentiment

    # Write updated data to a new JSON file
    with open(f"{base_path}/{output_filename}", "w") as file:
        json.dump(data, file, indent=4)


if __name__ == "__main__":
    base_path = "../../eda/kaggle/data"
    input_filename = "airlines_with_30_plus_reviews_cleaned_1.json"
    output_filename = "airlines_with_30_plus_reviews_cleaned_2.json"
    process_reviews_sentiment_analyzer(base_path, input_filename, output_filename)
```

review_large_token_length.py

```python
from transformers import AutoTokenizer

from summarization_bart_large_cnn import summarize_text
from split_and_summarize_recursively import chunk_and_summarize_very_large_review

# Tokenizer of the sentiment model, used to measure review length
tokenizer = AutoTokenizer.from_pretrained(
    "cardiffnlp/twitter-roberta-base-sentiment-latest"
)


def token_length_review(review):
    # Convert to lower case for processing
    review = review.lower()

    # Tokenize the review
    tokens = tokenizer.encode(review, add_special_tokens=True)
    token_length = len(tokens)
    print("token_length: ", token_length)

    # Check token length for BART summarization (BART's limit is 1024)
    if token_length > 1000:
        # If the review is too long, first chunk and summarize it to get below
        # 1000 tokens so that it can be processed by bart-large-cnn
        review = chunk_and_summarize_very_large_review(review)
        token_length = len(tokenizer.encode(review, add_special_tokens=True))

    # Check token length for RoBERTa sentiment analysis (RoBERTa's limit is 512)
    if token_length > 510:
        # Summarize long reviews
        return summarize_text(review)

    # Return the original review for shorter reviews
    return review
```

split_and_summarize_recursively.py

```python
from transformers import AutoTokenizer

from summarization_bart_large_cnn import summarize_text

tokenizer = AutoTokenizer.from_pretrained(
    "cardiffnlp/twitter-roberta-base-sentiment-latest"
)


def chunk_and_summarize_very_large_review(very_large_review):
    tokens = tokenizer.encode(very_large_review, add_special_tokens=True)
    token_length = len(tokens)

    chunk_size = 750  # Number of tokens in each chunk (between 500 and 1000)
    overlap = 150  # Number of tokens shared by consecutive chunks (20%)
    chunks = []
    start_token = 0

    # Split the text into overlapping chunks and summarize each one
    while start_token < token_length:
        end_token = min(start_token + chunk_size, token_length)
        chunk_tokens = tokens[start_token:end_token]
        # skip_special_tokens drops <s> and </s> so they don't leak into the text
        chunk = tokenizer.decode(chunk_tokens, skip_special_tokens=True)
        summarized_chunk = summarize_text(chunk)
        chunks.append(summarized_chunk)
        # Move forward, keeping `overlap` tokens of context, until the end
        start_token = end_token - overlap if end_token < token_length else end_token

    # Concatenate all the summarized chunks into a final string
    final_review_summary = " ".join(chunks)
    final_review_summary_len = len(
        tokenizer.encode(final_review_summary, add_special_tokens=True)
    )

    # Recursive call if the summary is still too long for BART
    if final_review_summary_len > 1000:
        return chunk_and_summarize_very_large_review(final_review_summary)

    return final_review_summary
```

summarization_bart_large_cnn.py

```python
from transformers import pipeline

summarizer = pipeline("summarization", model="facebook/bart-large-cnn")


def summarize_text(article, max_length=500, min_length=400):
    # max_length 500 keeps the summary under RoBERTa's 512-token limit;
    # min_length 400 avoids summarizing too aggressively
    summarized = summarizer(
        article, max_length=max_length, min_length=min_length, do_sample=False
    )
    return summarized[0]["summary_text"]
```

Step #3 Identifying Themes and Topics for each sentiment cluster

We have now classified each user review by sentiment and created five sentiment clusters. The next step is to identify the prevalent themes in each cluster. To do this, I use Latent Dirichlet Allocation (LDA), which sets us up for the last step: creating an industry analysis report covering opportunities for disruption and innovation.

Latent Dirichlet Allocation (LDA) is a probabilistic model used to uncover the underlying topics in large collections of text. It assumes that each document is a mixture of topics and that each topic is characterized by a distribution over words. LDA works backward from the documents to find a set of topics that is likely to have generated the collection. It handles large volumes of text well and can surface topics that might not be apparent through manual inspection. Since it is an unsupervised learning model, it doesn’t require pre-labeled training data, which is also very useful for this analysis.
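In notation, the standard LDA generative story can be written as below. Here K is the number of topics (num_topics = 6 in the LDA code later in this post), α and β are Dirichlet priors (gensim’s LdaModel exposes them as its alpha and eta parameters), θ_d is the topic mixture of review d, and φ_k is the word distribution of topic k:

$$
\begin{aligned}
\varphi_k &\sim \operatorname{Dirichlet}(\beta), && k = 1, \dots, K \\
\theta_d &\sim \operatorname{Dirichlet}(\alpha) && \text{for each review } d \\
z_{d,n} &\sim \operatorname{Categorical}(\theta_d) && \text{topic of the } n\text{-th word in review } d \\
w_{d,n} &\sim \operatorname{Categorical}(\varphi_{z_{d,n}}) && \text{the observed word}
\end{aligned}
$$

Summing over the hidden topic assignment gives the probability of seeing word v in review d:

$$
p(w_{d,n} = v \mid \theta_d, \varphi) = \sum_{k=1}^{K} \theta_{d,k}\, \varphi_{k,v}
$$

Working backward from the documents means inferring θ_d and φ_k from the observed words alone; gensim does this with online variational Bayes. The 20 words printed per topic in the LDA output are the highest-probability entries of each φ_k.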

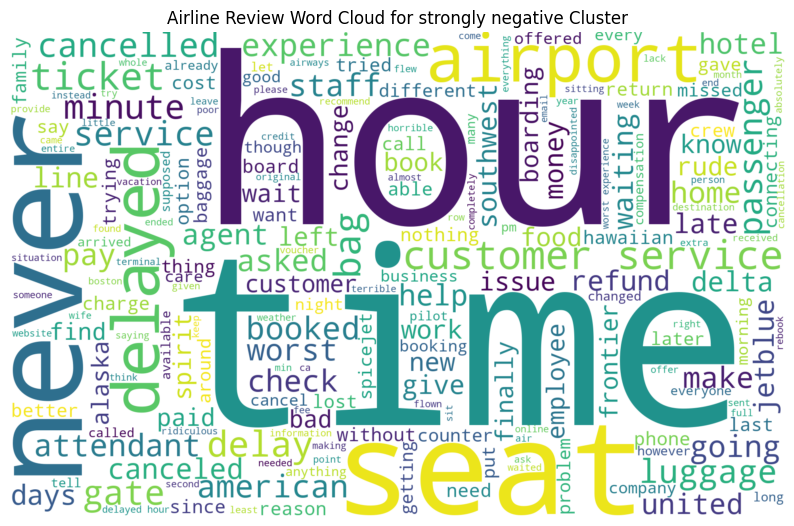

In the past, I have performed theme identification using WordCloud, but LDA has several advantages for identifying themes and topics. It offers a more sophisticated analysis by uncovering latent topics. Unlike a word cloud, which only shows word frequencies, LDA provides context by showing how words group together into topics, leading to a deeper understanding of the underlying themes. Its probabilistic approach can also differentiate between overlapping topics, offering clearer and more nuanced insight than the simpler visual representation of a word cloud.

Although a word cloud is very useful for visual interpretation, it relies on a human to identify themes and topics, which can be subjective and inconsistent. LDA offers a more systematic approach to topic identification, reducing (though not eliminating) the biases and inconsistencies associated with interpreting word clouds visually.

Lastly, it is much more practical to pass the text output of LDA to an LLM than to pass it a word cloud image when generating industry analysis reports.

There is still value in creating a word cloud to build intuition, which we can then use to sanity-check the report generated by the LLM.

Let’s look at the word cloud for strongly negative sentiment:

Some of the words that jump out are time-related (time, hour, minute, delayed, waiting), cancellation-related (refund and hotel most likely relate to cancellations), about poor service experience (staff, onboarding, seat), and luggage-related. This suggests that flight delays and cancellations, poor service, unhelpful staff, and baggage handling are prominent issues behind very poor customer sentiment. From my personal experience, this makes sense. Let’s keep this in mind when we review the industry analysis report generated by the LLM.
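A word cloud is essentially a rendering of term frequencies, so the same intuition can be checked numerically. The sketch below counts the most frequent non-stop-word terms for one sentiment cluster, assuming reviews shaped like the JSON records above (a "Review" text and a "Sentiment" label). The short stop-word set is an illustrative assumption (the LDA script uses NLTK’s English list plus custom words), and the sample reviews are made up for the example. If you do want the picture, the wordcloud package can render such counts via `WordCloud().generate_from_frequencies(...)`.

```python
import re
from collections import Counter

# Small illustrative stop-word list, including the custom words from the LDA script
STOP_WORDS = {
    "a", "an", "and", "again", "the", "to", "of", "in", "on", "for", "with",
    "was", "were", "is", "it", "i", "we", "my", "our", "they", "this", "that",
    "us", "get", "would", "could", "back", "got", "go", "use", "take", "went", "also",
}


def top_terms(reviews, sentiment, n=10):
    """Most frequent non-stop-word terms among reviews with the given sentiment."""
    counts = Counter()
    for review in reviews:
        if review["Sentiment"] != sentiment:
            continue
        tokens = re.findall(r"[a-z]+", review["Review"].lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts.most_common(n)


# Made-up sample reviews, for illustration only
sample = [
    {"Review": "Flight delayed, staff unhelpful.", "Sentiment": "strongly negative"},
    {"Review": "Delayed again and lost luggage.", "Sentiment": "strongly negative"},
    {"Review": "Friendly staff.", "Sentiment": "positive"},
]
print(top_terms(sample, "strongly negative", n=3))
```

Because the counts are filtered by sentiment label before tokenizing, running this over the full review set gives one frequency table per cluster, mirroring how the LDA step is run separately for each cluster.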

Code for LDA:

You can check out the full code on GitHub.

us_airline_review_lda.py

```python
import json

import nltk
import pandas as pd
from gensim.corpora.dictionary import Dictionary
from gensim.models.ldamodel import LdaModel
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

nltk.download("stopwords")
nltk.download("punkt")
nltk.download("punkt_tab")  # needed by word_tokenize in newer NLTK releases

stop_words = set(stopwords.words("english"))
custom_stop_words = [
    "us", "get", "would", "could", "back", "got", "go", "use", "take", "went", "also",
]
stop_words.update(custom_stop_words)


def preprocess_text(text):
    # Lowercase, tokenize, and keep alphabetic tokens that are not stop words
    tokens = word_tokenize(text.lower())
    return [w for w in tokens if w.isalpha() and w not in stop_words]


def generate_lda_summary(df, sentiments):
    summary = ""
    for sentiment in sentiments:
        sentiment_df = df[df["Sentiment"] == sentiment]
        if sentiment_df.empty:
            summary += f"\nNo reviews for sentiment: {sentiment}\n"
            continue

        # Create dictionary and bag-of-words corpus for LDA
        dictionary = Dictionary(sentiment_df["processed_review"])
        corpus = [dictionary.doc2bow(text) for text in sentiment_df["processed_review"]]

        # Train a separate LDA model for each sentiment cluster
        num_topics = 6
        lda_model = LdaModel(
            corpus, num_topics=num_topics, id2word=dictionary, passes=20, random_state=0
        )

        # Append the top 20 words of each topic to the summary
        summary += f"\nTopics for sentiment: {sentiment}\n"
        for idx, topic in lda_model.show_topics(
            formatted=True, num_topics=num_topics, num_words=20
        ):
            summary += f"Topic {idx}:\n{topic}\n"

    return summary.strip()


def generate_lda_summary_wrapper(input_file):
    # Load data
    with open(input_file, "r") as file:
        data = json.load(file)

    # Convert to DataFrame
    df = pd.DataFrame(data)

    # Text preprocessing
    df["processed_review"] = df["Review"].apply(preprocess_text)

    # Sentiment categories
    sentiments = [
        "strongly negative",
        "negative",
        "neutral",
        "positive",
        "strongly positive",
    ]

    return generate_lda_summary(df, sentiments)
```

Step #4 Utilizing Large Language Models to Perform SWOT Analysis, Analyze Opportunities for Innovation and Disruption, Apply the Blue Ocean Strategy Framework, and Formulate Hypotheses for Further Validation

In this step, I use a Large Language Model (LLM) to process the output of the Latent Dirichlet Allocation (LDA) analysis and generate an industry analysis report, along with a Blue Ocean Strategy report covering opportunities for disruption. This is not a trivial task, so I was looking for the following qualities in the LLM:

- Model Size, Capacity and Understanding: I am looking for an LLM that has a long enough context window, the capacity to understand complex business strategies, and the ability to interpret LDA outputs accurately. The model should handle abstract concepts, infer from data trends, and offer strategic insights.

- Data Interpretation Skills: The LLM should be able to interpret LDA outputs, which involves understanding topic distributions and the significance of keywords in various contexts.

- Knowledge of Business Strategies: The LLM must have a comprehensive understanding of various business strategies, especially the Blue Ocean Strategy framework. It should be able to contextualize LDA findings within this framework.

- Customization and Output Requirements: I needed a model that can create the strategy report in the format I want with zero-shot prompting, so that I don’t have to fine-tune the model or provide examples for few-shot prompting. The model should seamlessly integrate LDA outputs and generate a well-structured report without extensive manual intervention.

Based on these factors, I chose OpenAI’s GPT-4 and Mistral for this task. I was planning to use Llama 2 as well, but it has a smaller context length (4,096 tokens), so I dropped that idea for the time being.
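The actual instructions used here live in prompt_instructions_fewshots.json, which isn’t shown in this post. As a hypothetical sketch of what a zero-shot setup looks like, the function below assembles two chat messages: a system prompt listing the report sections (inferred from the headings of the generated report further down) and a user message carrying the raw LDA summary. No worked examples are included, which is what makes it zero-shot. The instruction wording and the names `REPORT_SECTIONS` and `build_messages` are illustrative assumptions, not the author’s prompt.

```python
# Hypothetical section list, inferred from the headings of the generated report
REPORT_SECTIONS = [
    "LDA analysis summary",
    "Findings by sentiment cluster",
    "SWOT analysis based on LDA findings",
    "Opportunities for market disruption",
    "Applying Blue Ocean Strategy (prioritize, reduce, eliminate, create)",
    "Hypothesis tests to validate findings",
    "Summary and conclusion",
]


def build_messages(lda_summary, sections=REPORT_SECTIONS):
    """Build a zero-shot chat prompt: instructions only, no example reports."""
    numbered = "\n".join(f"{i}. {name}" for i, name in enumerate(sections, start=1))
    instructions = (
        "You are a business strategy analyst. The user message contains LDA topics "
        "extracted from airline customer reviews, grouped by sentiment cluster. "
        "Write an industry analysis report with these sections, in order:\n" + numbered
    )
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": lda_summary},
    ]


messages = build_messages("Topics for sentiment: strongly negative\nTopic 0: ...")
print(messages[0]["content"])
```

The returned list has the shape expected by the `messages` argument of `client.chat.completions.create` in the OpenAI script.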

Industry Analysis Report and Observations on the report

Using OpenAI GPT-4 as the LLM (gpt-4-0125-preview):

Code for OpenAI:

openai_lda_post_processing.py

```python
import datetime
import json
import os
import re

import dotenv
from openai import OpenAI

from data_analysis.us_airline_review_lda import generate_lda_summary_wrapper

dotenv.load_dotenv()
OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY2")
client = OpenAI(api_key=OPENAI_API_KEY)

# Input file
base_path = "../../eda/kaggle/data"
input_filename = "us_airline_reviews_with_sentiment_analysis.json"
input_file = os.path.join(base_path, input_filename)

# Output file (timestamped)
formatted_datetime = datetime.datetime.now().strftime("%Y%m%d_%H%M%S")
base_output_path = "../../eda/kaggle/data/industry_report"
output_filename = f"us_airline_industry_analysis_openai_{formatted_datetime}.txt"
output_file = os.path.join(base_output_path, output_filename)

# System prompt with the report instructions
prompt_augmentation_file = "prompt_instructions_fewshots.json"
with open(prompt_augmentation_file, "r") as file:
    prompt_augmentation = json.load(file)
prompt_instruction = prompt_augmentation["openai_base_augmentation"]


def format_response(api_response):
    # Turn escaped newlines into real ones and strip Markdown headings and bold markers
    formatted_response = api_response.replace("\\n", "\n")
    formatted_response = re.sub(r"###\s*", "", formatted_response)
    formatted_response = re.sub(r"\*\*", "", formatted_response)
    return formatted_response


def openai_generate_insights_from_cluster_lda(user_query):
    try:
        openai_response = client.chat.completions.create(
            model="gpt-4-0125-preview",
            messages=[
                {"role": "system", "content": prompt_instruction},
                {"role": "user", "content": user_query},
            ],
            temperature=0.75,
        )
        industry_report = openai_response.choices[0].message.content
        return format_response(industry_report)
    except Exception as e:
        print("Error during API call:", e)
        return "An error occurred while generating insights."


if __name__ == "__main__":
    lda_output = generate_lda_summary_wrapper(input_file)
    print("LDA Output: ", lda_output)

    generated_insights = openai_generate_insights_from_cluster_lda(lda_output)
    print("Industry Analysis Report:\n", generated_insights)

    # Write the LDA output and Industry Analysis Report to a text file
    os.makedirs(base_output_path, exist_ok=True)
    with open(output_file, "w") as file:
        file.write("LDA Output: \n" + lda_output + "\n\n")
        file.write("Industry Analysis Report: \n" + generated_insights)
```

OpenAI Output:

Industry Analysis Report:

Latent Dirichlet Allocation (LDA) Analysis Summary

The LDA analysis of airline user reviews, categorized by sentiments from ‘strongly negative’ to ‘strongly positive’, reveals distinct themes central to passenger experiences across the sentiment spectrum. Overall findings indicate that delays, service quality, seat comfort, and staff behavior are pivotal factors influencing customer sentiment. The analysis underscores the importance of timely flights, responsive and courteous service, and comfortable seating arrangements in shaping passenger perceptions.

Findings by Sentiment Cluster

- Strongly Negative: Delays, poor service, and unhelpful staff are dominant themes. Issues such as baggage handling and check-in problems are also highlighted.

- Negative: Similar to strongly negative sentiments, delays and service quality issues persist, with additional emphasis on problems at the gate and with boarding processes.

- Neutral: Discussions pivot around operational aspects like gate changes and boarding, with a mix of positive and negative experiences without extreme sentiments.

- Positive: Positive experiences often relate to efficient boarding, punctuality, and satisfactory in-flight services including food and entertainment.

- Strongly Positive: Exceptional service quality, staff friendliness, and overall good flight experiences, including comfort and punctuality, are emphasized.

SWOT Analysis Based on LDA Findings

- Strengths: When airlines excel, it is often due to punctuality, efficient service, and positive staff interactions, leading to strong positive sentiments.

- Weaknesses: Delays, poor customer service, and uncomfortable seating arrangements are repeatedly criticized, directly impacting negative perceptions.

- Opportunities: Enhancing digital experiences for booking and check-ins, improving on-time performance, and investing in staff training could improve customer satisfaction.

- Threats: Consistent negative experiences related to delays and service can lead to brand erosion and customer churn to competitors.

Opportunities for Market Disruption

The recurrent themes of dissatisfaction offer opportunities for disruption in areas such as improving operational efficiency to reduce delays, reimagining customer service with a focus on digital engagement, and redefining comfort in seating and overall in-flight experience.

Applying Blue Ocean Strategy

To create a new market space, airlines should consider the following:

- Prioritize: Investment in punctuality and operational efficiency alongside digital transformation for customer engagement.

- Reduce: Focus less on ancillary charges that aggravate customers, such as baggage fees or seat selection charges, to improve perceived value.

- Eliminate: Outdated practices such as cumbersome check-in processes or boarding methods that contribute to delays and customer frustration.

- Create: Innovations in seat comfort, personalization of service, and leveraging technology to enhance customer experience from booking to arrival.

Hypothesis Tests to Validate Findings

- Delays vs. Sentiment:

  - H0: Flight delays have no effect on customer sentiment.

  - Ha: Flight delays negatively affect customer sentiment.

- Service Quality vs. Sentiment:

  - H0: Service quality has no effect on customer sentiment.

  - Ha: Higher service quality leads to more positive customer sentiment.

- Seat Comfort vs. Sentiment:

  - H0: Seat comfort has no effect on customer sentiment.

  - Ha: Improved seat comfort leads to more positive customer sentiment.
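To make the first hypothesis test concrete, here is a minimal sketch of a one-sided permutation test in plain Python. It assumes each review carries a numeric sentiment score (a hypothetical mapping of the five clusters onto -2 for strongly negative through 2 for strongly positive) and treats a review as "delayed" if its text contains the word "delay". Neither the scale nor the keyword rule comes from the original pipeline, and the sample reviews are invented for illustration.

```python
import random
from statistics import mean

# Hypothetical sample: (review text, sentiment score on an assumed -2..2 scale).
SAMPLE_REVIEWS = [
    ("Flight delay of 3 hours, no updates", -2),
    ("Delayed again and rude staff", -2),
    ("Long delay at the gate", -1),
    ("Slight delay but friendly crew", 0),
    ("On time and comfortable seats", 2),
    ("Smooth boarding, arrived early", 1),
    ("Great service, on time", 2),
    ("Average flight, nothing special", 0),
]


def permutation_test_delay_effect(reviews, n_permutations=10_000, seed=42):
    """One-sided permutation test.

    H0: delays have no effect on sentiment.
    Ha: reviews that mention a delay have a lower mean sentiment.
    Returns (observed mean gap, estimated p-value).
    """
    # Assumed keyword rule for flagging a review as "delayed".
    delayed = [score for text, score in reviews if "delay" in text.lower()]
    on_time = [score for text, score in reviews if "delay" not in text.lower()]
    if not delayed or not on_time:
        raise ValueError("Both groups need at least one review")

    # Positive when delayed reviews score lower than the rest.
    observed = mean(on_time) - mean(delayed)

    pooled = delayed + on_time
    n_delayed = len(delayed)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_permutations):
        # Under H0 the "delayed" label is exchangeable, so reassign it at random.
        rng.shuffle(pooled)
        diff = mean(pooled[n_delayed:]) - mean(pooled[:n_delayed])
        if diff >= observed:
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


observed_gap, p_value = permutation_test_delay_effect(SAMPLE_REVIEWS)
print(f"Mean sentiment gap: {observed_gap:.2f}, p-value: {p_value:.4f}")
```

A permutation test is used here because it makes no normality assumption about ordinal sentiment scores; a t-test or Mann-Whitney U test from a statistics library would be a reasonable alternative, and the same pattern applies to the service quality and seat comfort hypotheses with different keyword rules.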

Summary and Conclusion

The LDA analysis of airline reviews provides insightful data into the factors that significantly influence passenger satisfaction across different sentiment levels. By addressing the identified weaknesses and threats through strategic measures—prioritizing operational efficiency, reducing unnecessary charges, eliminating outdated practices, and creating innovative service experiences—airlines can disrupt the market effectively. Adopting a Blue Ocean Strategy, focusing on unmet and underserved needs, can help airlines differentiate themselves in a competitive landscape. The proposed hypothesis tests offer a methodical approach to validating these findings and guiding strategic decisions to enhance customer satisfaction and loyalty in the airline industry.

Observations on ChatGPT’s Output:

I think the analysis is really good. It highlights flight delays and poor service as major sources of customer dissatisfaction, and punctuality, service quality, and staff friendliness as major drivers of a positive customer experience. The hypothesis tests are extremely helpful.

However, the analysis gives little weight to factors such as baggage handling (mentioned only in passing) and flight cancellations (not mentioned at all), both of which contribute to negative sentiment. The Opportunities section of the SWOT analysis feels very generic, and the Blue Ocean Strategy's focus on digital transformation makes it read like a report full of buzzwords.

Overall, the report is still very insightful and useful. With a bit of human review and refinement, this could be a great starting point for a business model innovation strategy.

Using Mistral as LLM:

Code for Mistral

mistral_lda_post_processing.py

import datetime
import json
import os

import dotenv
from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage
from pydantic.json import pydantic_encoder

from data_analysis.us_airline_review_lda import generate_lda_summary_wrapper

# Load MISTRAL_API_KEY from a local .env file
dotenv.load_dotenv()
MISTRAL_API_KEY = os.environ.get("MISTRAL_API_KEY")

model_mistral_tiny = "mistral-tiny"  # Mistral-7B-v0.2
model_mistral_small = "mistral-small"  # Mixtral-8X7B-v0.1
model_mistral_medium = "mistral-medium"  # internal prototype model.
model = model_mistral_medium

client = MistralClient(api_key=MISTRAL_API_KEY)

# input file:
base_path = "../../eda/kaggle/data"
input_filename = "us_airline_reviews_with_sentiment_analysis.json"
input_file = os.path.join(base_path, input_filename)

# output file (timestamped so each run writes a separate report):
current_datetime = datetime.datetime.now()
formatted_datetime = current_datetime.strftime("%Y%m%d_%H%M%S")
base_output_path = "../../eda/kaggle/data/industry_report"
output_filename = f"us_airline_industry_analysis_mistral_{formatted_datetime}.txt"
output_file = os.path.join(base_output_path, output_filename)

# Get prompt instruction (sent to the model as the system message)
prompt_augmentation_file = "prompt_instructions_fewshots.json"
with open(prompt_augmentation_file, "r") as file:
    prompt_augmentation = json.load(file)
prompt_instruction = prompt_augmentation["mistral_base_augmentation"]


def mistral_generate_insights_from_cluster_lda(user_query):
    # Instructions go in the system message; the LDA summary is the user message
    system_message = ChatMessage(role="system", content=prompt_instruction)
    user_message = ChatMessage(role="user", content=user_query)
    messages = [system_message, user_message]

    mistral_response = client.chat(model=model, messages=messages, temperature=0.55)

    # Convert the pydantic response object to a JSON string, then parse it into a dict
    mistral_response_json = json.dumps(mistral_response, default=pydantic_encoder)
    mistral_response_parsed = json.loads(mistral_response_json)

    # Access the message content of the first choice
    try:
        return mistral_response_parsed["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as e:
        error_message = f"Error parsing the response: {e}"
        print(error_message)
        return error_message


lda_output = generate_lda_summary_wrapper(input_file)
print("LDA Output: ", lda_output)

generated_insights = mistral_generate_insights_from_cluster_lda(lda_output)
print("Industry Analysis Report: \n", generated_insights)

# Write the Industry Analysis Report to a text file (create the folder if needed)
os.makedirs(base_output_path, exist_ok=True)
with open(output_file, "w") as file:
    file.write("LDA Output: \n" + str(lda_output) + "\n\n")
    file.write("Industry Analysis Report: \n" + generated_insights)
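The script passes the output of `generate_lda_summary_wrapper` straight to the model as the user message. That helper is not shown in this section, so the sketch below is a hypothetical stand-in showing one way per-sentiment LDA topics could be flattened into a plain-text query. The input structure (a dict mapping each sentiment label to a list of topic keyword lists) and the name `format_lda_summary` are assumptions, not the original implementation.

```python
def format_lda_summary(topics_by_sentiment, top_n=5):
    """Hypothetical helper: flatten per-sentiment LDA topics into plain text.

    topics_by_sentiment: dict of sentiment label -> list of topics,
    where each topic is a list of keywords ordered by weight (assumed).
    Only the top_n keywords of each topic are kept to keep the prompt short.
    """
    lines = []
    for sentiment, topics in topics_by_sentiment.items():
        lines.append(f"Sentiment: {sentiment}")
        for i, keywords in enumerate(topics, start=1):
            lines.append(f"  Topic {i}: {', '.join(keywords[:top_n])}")
    return "\n".join(lines)


# Illustrative input only; real topics would come from the fitted LDA models.
example_topics = {
    "strongly negative": [["delay", "hours", "service", "staff", "rude", "late"]],
    "strongly positive": [["great", "crew", "seat"]],
}
print(format_lda_summary(example_topics))
```

Keeping the summary as short, labeled lines makes it easy for the model to map each sentiment cluster to its themes, which is the structure the prompt asks it to report on.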

Mistral Output:

Industry Analysis Report:

The LDA analysis of airline user reviews reveals several key findings:

Overall findings:

The most common topics across all sentiments involve flight delays, poor customer service, and issues with baggage handling and seating. The strongest negative sentiment topics revolve around flight delays and cancellations, while the strongest positive sentiment topics involve comfortable seating, good service, and on-time flights.

Sentiment cluster findings:

- Strongly negative: Customers are highly dissatisfied with flight delays, poor customer service, and issues with baggage handling.

- Negative: Complaints center on flight delays, poor communication, and uncomfortable seating.

- Neutral: Reviews are mixed, with some customers satisfied with their flights and others expressing minor complaints or concerns.

- Positive: Customers are pleased with on-time flights, comfortable seating, and friendly staff.

- Strongly positive: Passengers express high levels of satisfaction with their overall flight experience, including comfortable seating, good service, and on-time performance.

SWOT analysis:

- Strengths: Airlines that consistently deliver on-time flights and provide comfortable seating and good service can build a strong reputation and customer loyalty.

- Weaknesses: Frequent flight delays, poor customer service, and issues with baggage handling can lead to negative reviews and damage to an airline’s reputation.

- Opportunities: Airlines can differentiate themselves by offering superior customer service, comfortable seating, and reliable on-time performance.

- Threats: Competition from low-cost carriers, increasing fuel prices, and the potential for negative publicity from customer complaints can pose challenges to airlines.

Market disruption opportunities:

Airlines can disrupt the market by offering unique value propositions such as superior in-flight entertainment, enhanced loyalty programs, and innovative pricing strategies.

Blue Ocean Strategy:

To create new market space, airlines should prioritize services that differentiate them from competitors, such as superior in-flight entertainment and enhanced loyalty programs. They should reduce or eliminate services that do not add significant value to the customer experience, such as excessive fees for baggage and seat selection.

Hypothesis tests:

- T-test:

  - H0: There is no difference in the average satisfaction ratings between customers who experienced flight delays and those who did not.

  - Ha: There is a difference in the average satisfaction ratings between customers who experienced flight delays and those who did not.

- Chi-square test:

  - H0: There is no association between the sentiment of a review and the topic of the review.

  - Ha: There is an association between the sentiment of a review and the topic of the review.

- ANOVA:

  - H0: There is no difference in the average satisfaction ratings between different airlines.

  - Ha: There is a difference in the average satisfaction ratings between different airlines.
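The chi-square test proposed above can be sketched without extra dependencies. The version below computes Pearson's chi-square statistic from (sentiment, dominant topic) pairs and estimates the p-value by shuffling the topic labels, which keeps both sets of marginal totals fixed. It assumes each review has already been assigned a single dominant LDA topic; the sentiment labels, topic names, and counts in the example are hypothetical.

```python
import random
from collections import Counter


def chi_square_statistic(pairs):
    """Pearson chi-square statistic for a list of (sentiment, topic) pairs."""
    n = len(pairs)
    observed = Counter(pairs)
    row_totals = Counter(s for s, _ in pairs)
    col_totals = Counter(t for _, t in pairs)
    stat = 0.0
    for s, r in row_totals.items():
        for t, c in col_totals.items():
            expected = r * c / n  # expected count under independence
            stat += (observed[(s, t)] - expected) ** 2 / expected
    return stat


def chi_square_permutation_test(pairs, n_permutations=5_000, seed=0):
    """H0: sentiment and topic are independent. Returns (statistic, p-value)."""
    observed_stat = chi_square_statistic(pairs)
    sentiments = [s for s, _ in pairs]
    topics = [t for _, t in pairs]
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffling topics breaks any association but preserves the marginals.
        rng.shuffle(topics)
        stat = chi_square_statistic(list(zip(sentiments, topics)))
        # Small tolerance so ties are not lost to floating-point rounding.
        if stat >= observed_stat - 1e-12:
            extreme += 1
    return observed_stat, (extreme + 1) / (n_permutations + 1)


# Hypothetical counts: negative reviews skew toward "delays",
# positive reviews toward "seat comfort".
example_pairs = (
    [("negative", "delays")] * 20
    + [("negative", "seat comfort")] * 5
    + [("positive", "delays")] * 5
    + [("positive", "seat comfort")] * 20
)
stat, p = chi_square_permutation_test(example_pairs)
print(f"chi-square = {stat:.2f}, permutation p-value = {p:.4f}")
```

With SciPy available, `scipy.stats.chi2_contingency` on the equivalent contingency table is the more conventional route; the permutation version is shown only because it is self-contained.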

In conclusion, the LDA analysis of airline user reviews reveals several key insights into customer satisfaction and areas for improvement. Airlines can differentiate themselves by offering superior customer service, comfortable seating, and reliable on-time performance. They can also disrupt the market by offering unique value propositions such as superior in-flight entertainment and enhanced loyalty programs. To create new market space, airlines should prioritize services that differentiate them from competitors and eliminate services that do not add significant value to the customer experience. Hypothesis tests such as t-tests, chi-square tests, and ANOVA can be used to validate these findings and inform strategic decision-making.

Observations on Mistral’s Output:

I prefer the overall findings and sentiment cluster findings sections of Mistral’s report. They clearly highlight flight cancellations and baggage handling as factors that contribute to negative customer experiences. The hypothesis tests are well structured and exhaustive.

However, the SWOT analysis, market disruption opportunities, and Blue Ocean Strategy sections are overly generic and miss the mark. Recommending unique value propositions such as superior in-flight entertainment, enhanced loyalty programs, and innovative pricing strategies is weak advice, because none of them address the pain points the LDA surfaced: delays, cancellations, baggage handling, and service.

Overall, the report is still very insightful and useful. With a bit of human review and refinement, this could be a great starting point for a business model innovation strategy.
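Gaps such as ChatGPT's silence on flight cancellations were found by reading the two reports side by side. A small keyword check can make that review a little more systematic by flagging themes a report never mentions. The sketch below assumes each LDA theme can be represented by a few keywords (the `THEMES` mapping is hypothetical); it is only a screening aid, since a keyword match says nothing about how well a theme is analyzed.

```python
# Hypothetical keyword lists for themes surfaced by the LDA step.
THEMES = {
    "delays": ["delay"],
    "cancellations": ["cancel"],
    "baggage handling": ["baggage", "luggage"],
}


def theme_coverage(report, themes=THEMES):
    """Map each theme to True if any of its keywords appears in the report."""
    text = report.lower()
    return {
        theme: any(keyword in text for keyword in keywords)
        for theme, keywords in themes.items()
    }


def missing_themes(report, themes=THEMES):
    """List the themes whose keywords never appear in the report."""
    return [theme for theme, covered in theme_coverage(report, themes).items() if not covered]


# Excerpts quoted from the two reports above.
chatgpt_excerpt = (
    "Consistent negative experiences related to delays and service can lead "
    "to brand erosion and customer churn to competitors."
)
mistral_excerpt = (
    "The strongest negative sentiment topics revolve around flight delays and "
    "cancellations, while the strongest positive sentiment topics involve "
    "comfortable seating, good service, and on-time flights."
)
print("ChatGPT excerpt misses:", missing_themes(chatgpt_excerpt))
print("Mistral excerpt misses:", missing_themes(mistral_excerpt))
```

Running the check on each full report, rather than on excerpts, gives a quick first pass before the human review described above.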

How to use these insights to drive innovation

As you can see, the strategy generated by this process is not perfect, but it is really good. I am no airline industry expert, but the pain points and opportunities for improvement highlighted in the reports seem accurate based on my experience as a consumer. The process discussed in this blog can be used in user research and industry analysis. It can help a researcher speed up the analysis, unearth hidden insights, and reduce some of their own biases.

Overall, the insights garnered from this process using sentiment analysis, LDA, and LLMs offer a rich foundation for driving disruptive innovation. Businesses can harness these insights to tailor products and services to the needs of underserved customers.

For instance, a new airline (or even an existing one) could innovate in areas such as on-time flights, preventing lost luggage, and minimizing flight cancellations, and in doing so differentiate itself from other airlines. Imagine an airline whose unique value proposition is being the best in the industry on three counts: on-time flights, the fewest flight cancellations, and the lowest rate of lost luggage (and, if any of these problems do occur, handling them with care and empathy). This would be a very attractive value proposition for a large segment of air travelers. Such targeted improvements not only enhance the customer experience but can also provide competitive differentiation.

This process of turning data into an actionable innovation strategy can help disrupt existing market paradigms and carve out new opportunities for growth and differentiation.

Closing Thoughts

By analyzing customer sentiments and themes, businesses can pinpoint critical areas for innovation. This methodology is not limited to analyzing customer reviews; it can be applied to a wide variety of use cases and scenarios. For example, an organization with a large corpus of data that can be classified, clustered, and thematically analyzed can use this process to develop deeper insights into its business operations and customers. These deeper insights can help trigger incremental or disruptive innovation.

Machine learning has immense potential in helping organizations uncover new insights and drive innovation.

References, Inspirations & Further Reading:

- Kaggle Airline Review Data Set: https://www.kaggle.com/datasets/juhibhojani/airline-reviews

- The Innovator’s Dilemma by Clayton M. Christensen

- Blue Ocean Strategy by W. Chan Kim and Renée Mauborgne

- Seizing the White Space: Business Model Innovation for Growth and Renewal by Mark W. Johnson

- Competitive Advantage: Creating and Sustaining Superior Performance by Michael E. Porter

- Competitive Strategy: Techniques for Analyzing Industries and Competitors by Michael E. Porter

- University of Southern California Marshall School of Business MBA Classes:

  - MOR 564 – Strategic Innovation – Creating new markets by Prof. Violina Rindova